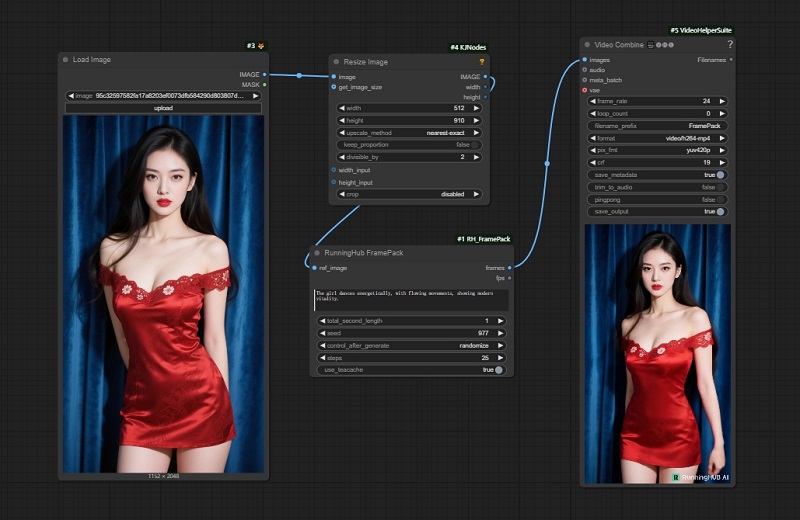

Video Diffusion, But Feels Like Image Diffusion

FramePack is a next-frame prediction neural network structure that generates videos progressively. It compresses the input context to a constant length, so the generation workload stays the same no matter how long the video gets.
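
As a rough illustration of how compression can keep the context at a constant length, the sketch below assumes a hypothetical geometric schedule. The most recent frame keeps `tokens_per_frame` tokens, each older frame keeps `1/ratio` as many as the frame after it, and frames whose budget reaches zero are dropped. The numbers (1,024 tokens per frame, a ratio of 2) and the function names `frame_token_budgets` and `context_length` are assumptions for illustration. They are not FramePack's actual configuration or code.

```python
from typing import List


def frame_token_budgets(num_past_frames: int, tokens_per_frame: int = 1024,
                        ratio: int = 2) -> List[int]:
    """Token budget per past frame, most recent frame first.

    Hypothetical geometric schedule: each older frame keeps 1/ratio of the
    tokens of the frame after it; once the budget reaches 0 the frame is dropped.
    """
    if ratio < 2:
        raise ValueError("ratio must be >= 2 for the budgets to shrink")
    budgets = []
    tokens = tokens_per_frame
    for _ in range(num_past_frames):
        budgets.append(tokens)
        tokens //= ratio  # integer division eventually reaches 0
    return budgets


def context_length(num_past_frames: int, tokens_per_frame: int = 1024,
                   ratio: int = 2) -> int:
    """Total number of context tokens fed to the model for the next frame."""
    return sum(frame_token_budgets(num_past_frames, tokens_per_frame, ratio))


for n in (1, 5, 11, 100, 1800):
    print(n, context_length(n))
# 1 1024
# 5 1984
# 11 2047
# 100 2047
# 1800 2047
```

Because the budgets form a geometric series, the total stays below `2 * tokens_per_frame` (for `ratio=2`) however many frames have already been generated. That bound is what makes the per-step workload independent of video length in this toy model.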

- Processes a large number of frames, even on laptop GPUs

- Requires only 6 GB of GPU memory

- Can be trained with a much larger batch size

- Generates 1-minute, 30 FPS videos (1,800 frames)
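
The 1-minute figure follows from 60 s × 30 FPS = 1,800 frames. Below is a minimal sketch of progressive generation at that length. It assumes a hypothetical `predict_section` model call that emits 30 frames per step and a fixed context of 16 frames. All constants and function names are illustrative. For brevity, `compress_context` simply keeps the most recent frames. This truncation is a stand-in for FramePack's context compression, not the real method.

```python
from typing import List, Tuple

FPS = 30
DURATION_S = 60
TOTAL_FRAMES = FPS * DURATION_S  # 1800 frames
SECTION_FRAMES = 30              # hypothetical: frames generated per step
CONTEXT_FRAMES = 16              # hypothetical: fixed context size

Frame = int  # placeholder: a "frame" is just its index in this sketch


def compress_context(history: List[Frame], size: int) -> List[Frame]:
    # Stand-in compression: keep at most `size` recent frames so the
    # model input never grows (simple truncation, not FramePack's scheme).
    return history[-size:] if size > 0 else []


def predict_section(context: List[Frame], start: int, n: int) -> List[Frame]:
    # Hypothetical model call; returns n placeholder frames.
    return list(range(start, start + n))


def generate(total_frames: int = TOTAL_FRAMES) -> Tuple[List[Frame], List[int]]:
    video: List[Frame] = []
    context_sizes: List[int] = []  # context length seen at each step
    while len(video) < total_frames:
        context = compress_context(video, CONTEXT_FRAMES)
        context_sizes.append(len(context))
        n = min(SECTION_FRAMES, total_frames - len(video))
        video.extend(predict_section(context, len(video), n))
    return video, context_sizes


video, sizes = generate()
print(len(video), len(sizes), max(sizes))  # 1800 60 16
```

Every step sees at most 16 context frames, so the per-step cost is flat, and the total cost grows only linearly with the number of steps (60 here).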